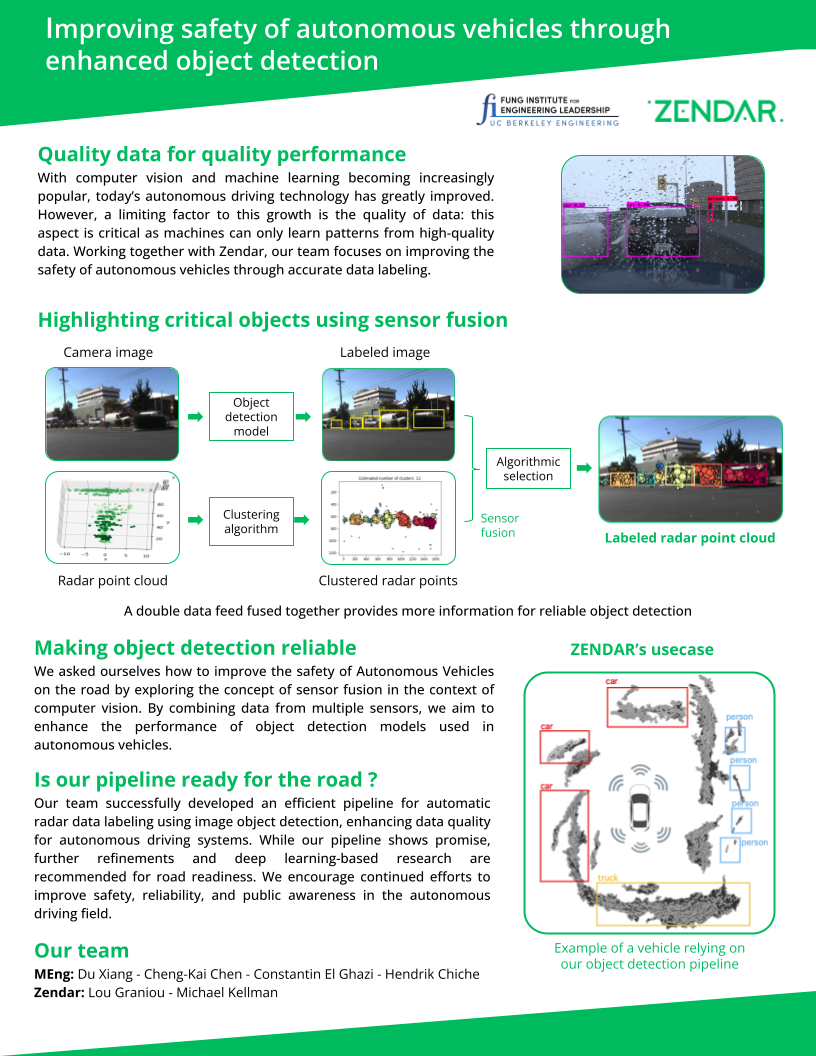

Revolutionizing Autonomous Driving Software For Safer Object Detection

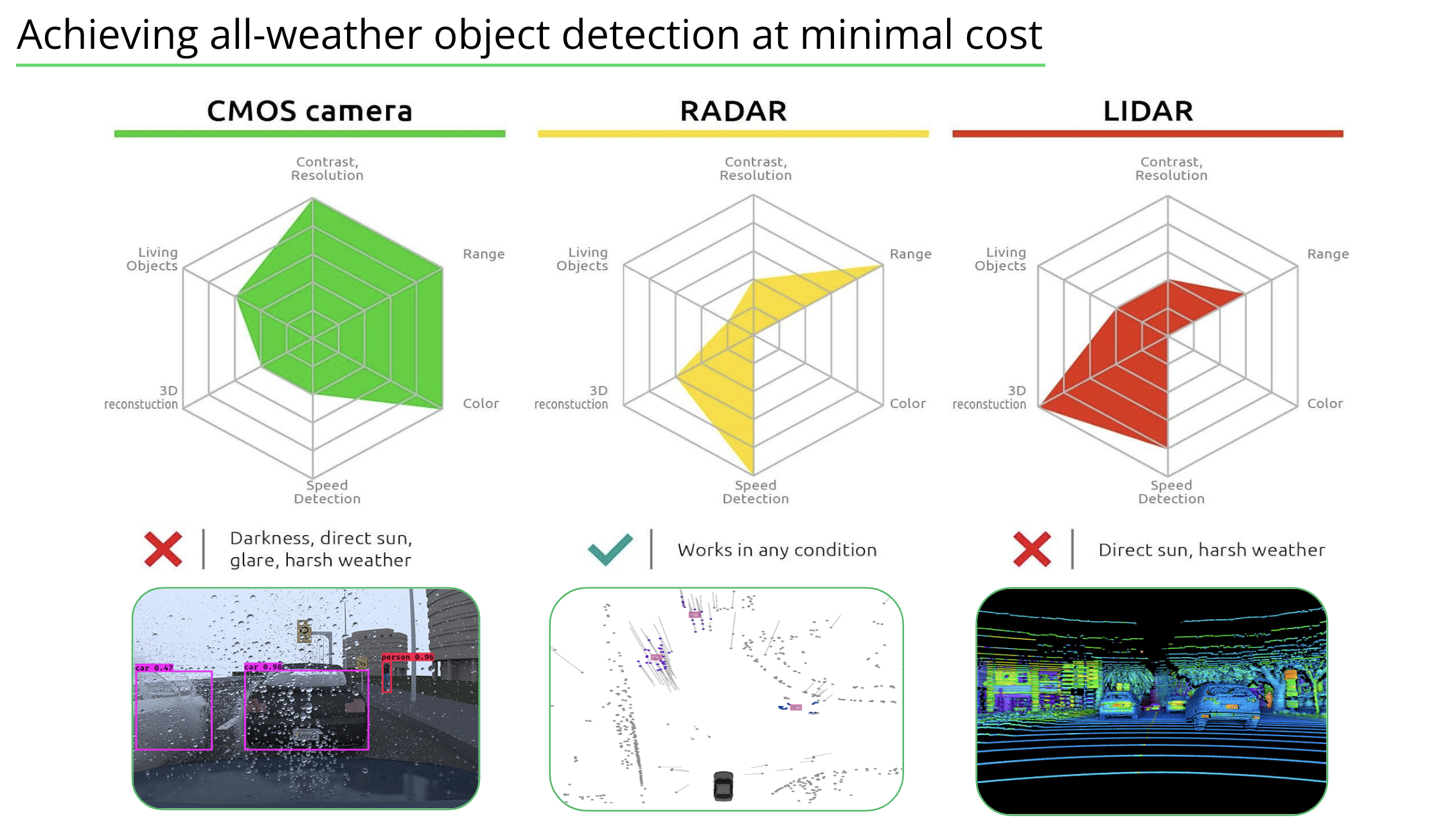

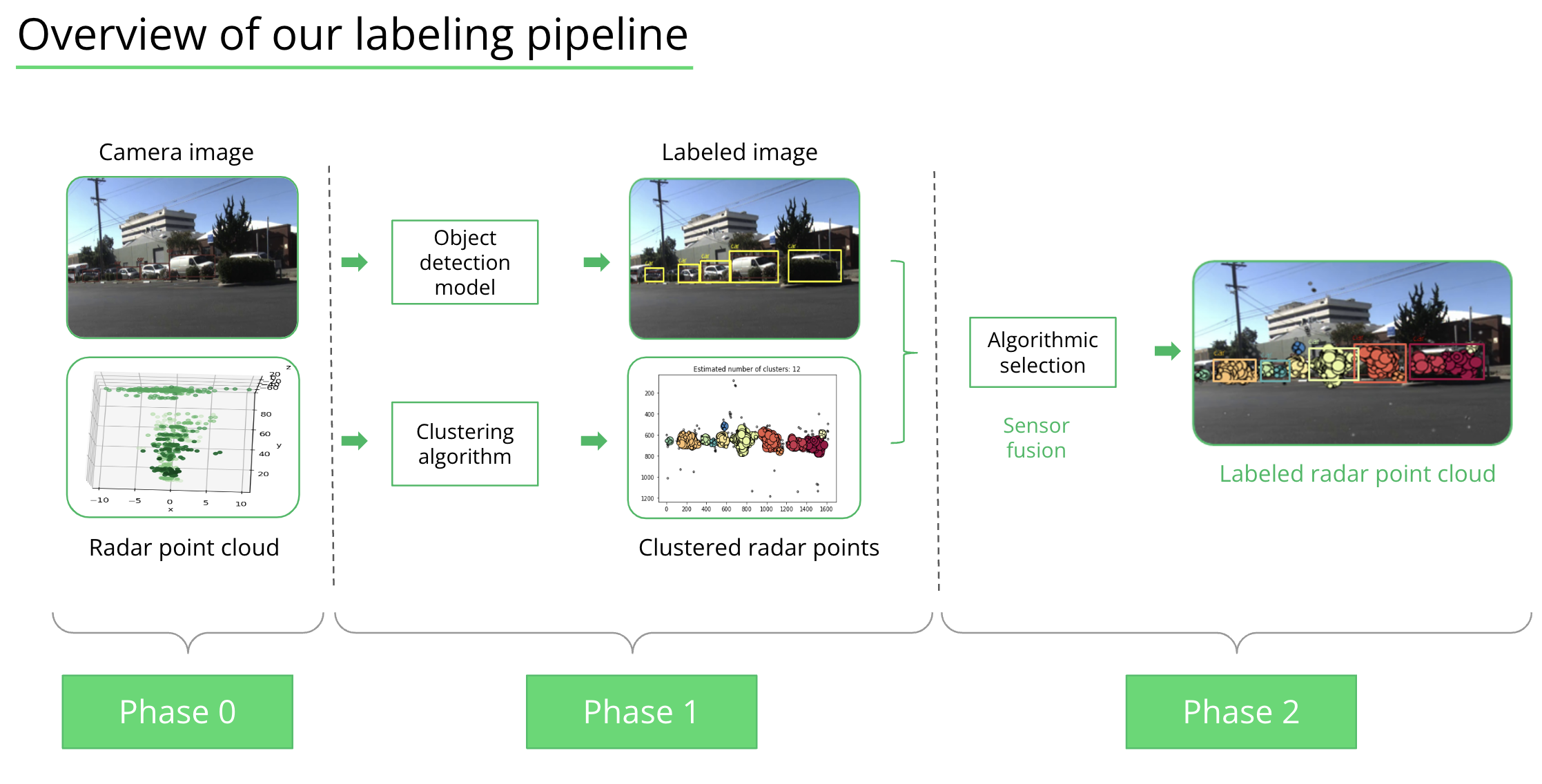

Welcome to the project website! This project focuses on improving object detection and localization using radar and image data in complex environments. Here, you can explore the various aspects of our end-to-end pipeline, including coordinate transformation, YOLO object detection, DBSCAN clustering, and merging radar clusters with image labels.

Overview

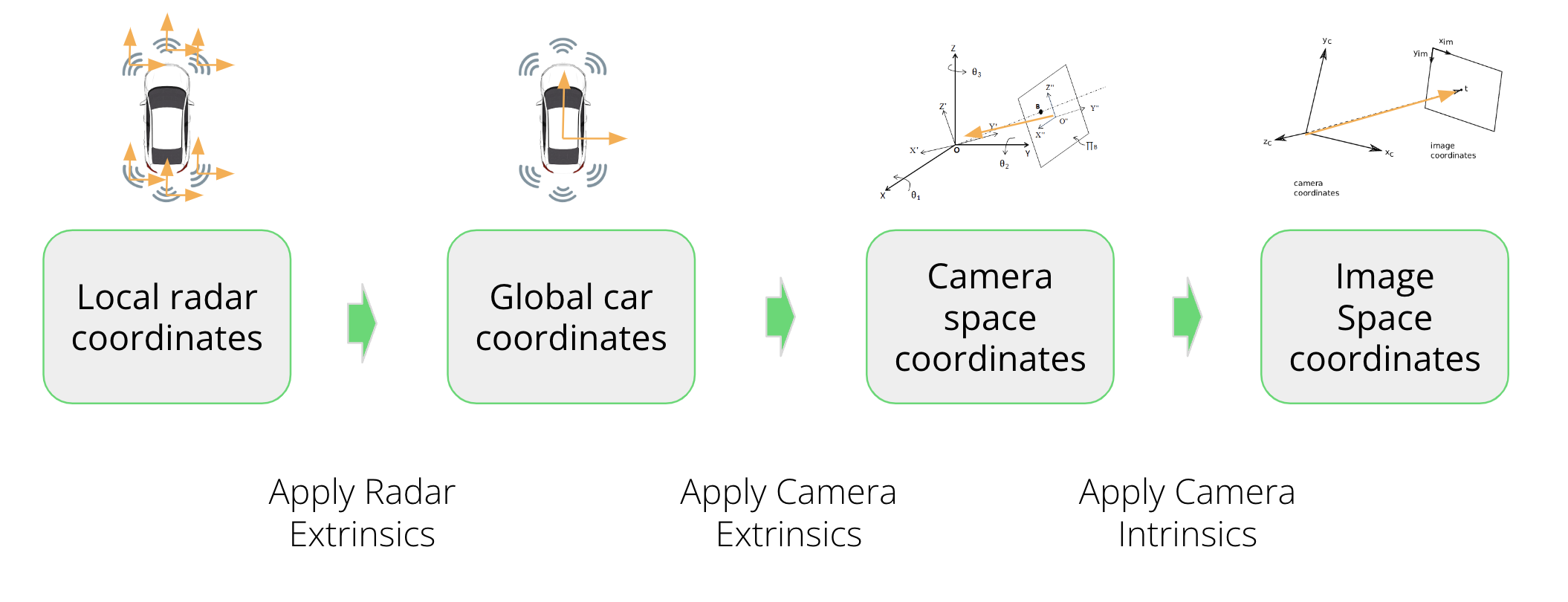

Coordinate Transformation

Converting radar data from its native coordinate system to a coordinate system aligned with camera data.

YOLO Object Detection

A real-time object detection algorithm that simultaneously predicts object classes and bounding boxes.

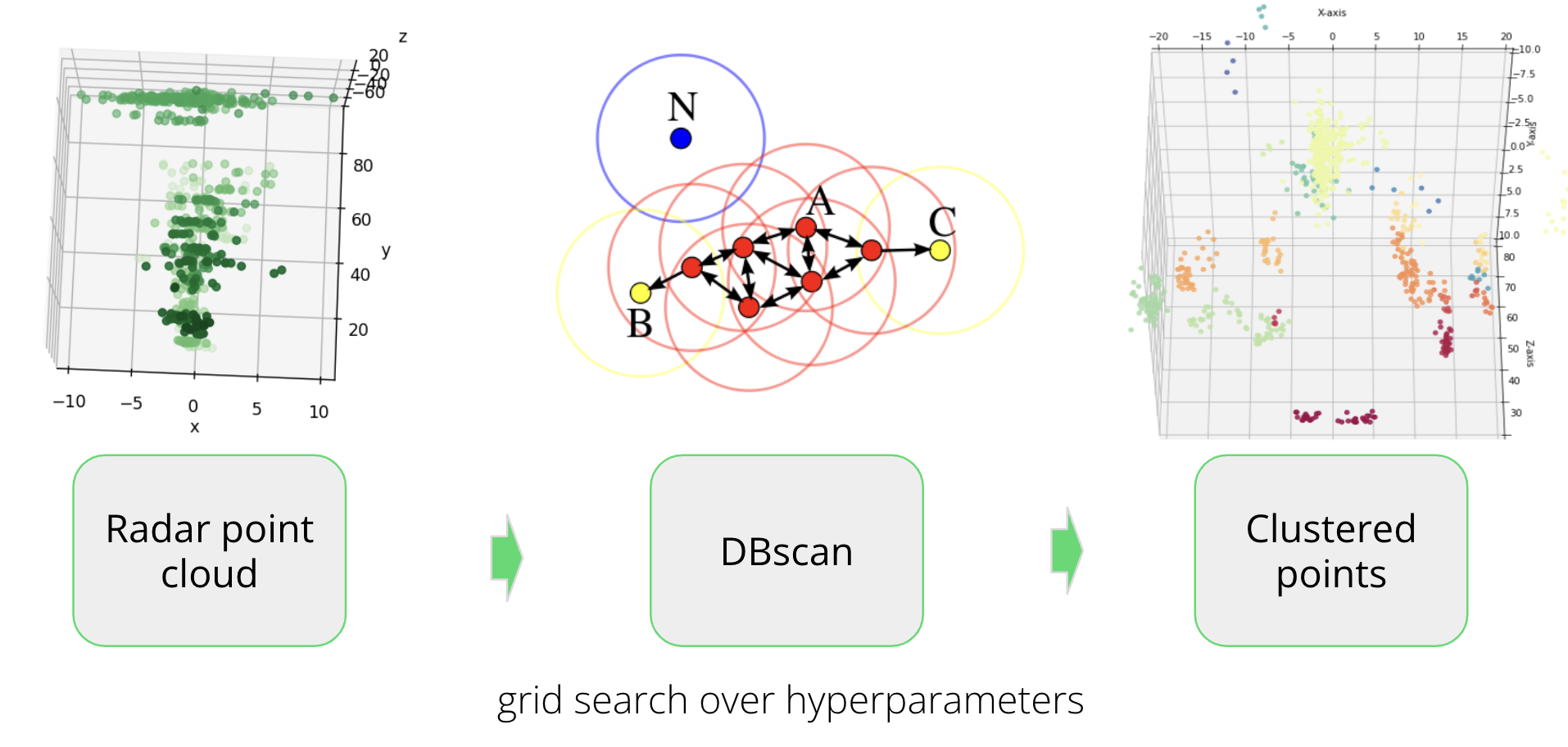

DBSCAN Clustering

A density-based clustering algorithm for unsupervised machine learning that groups points lying in dense regions and marks isolated points as noise.

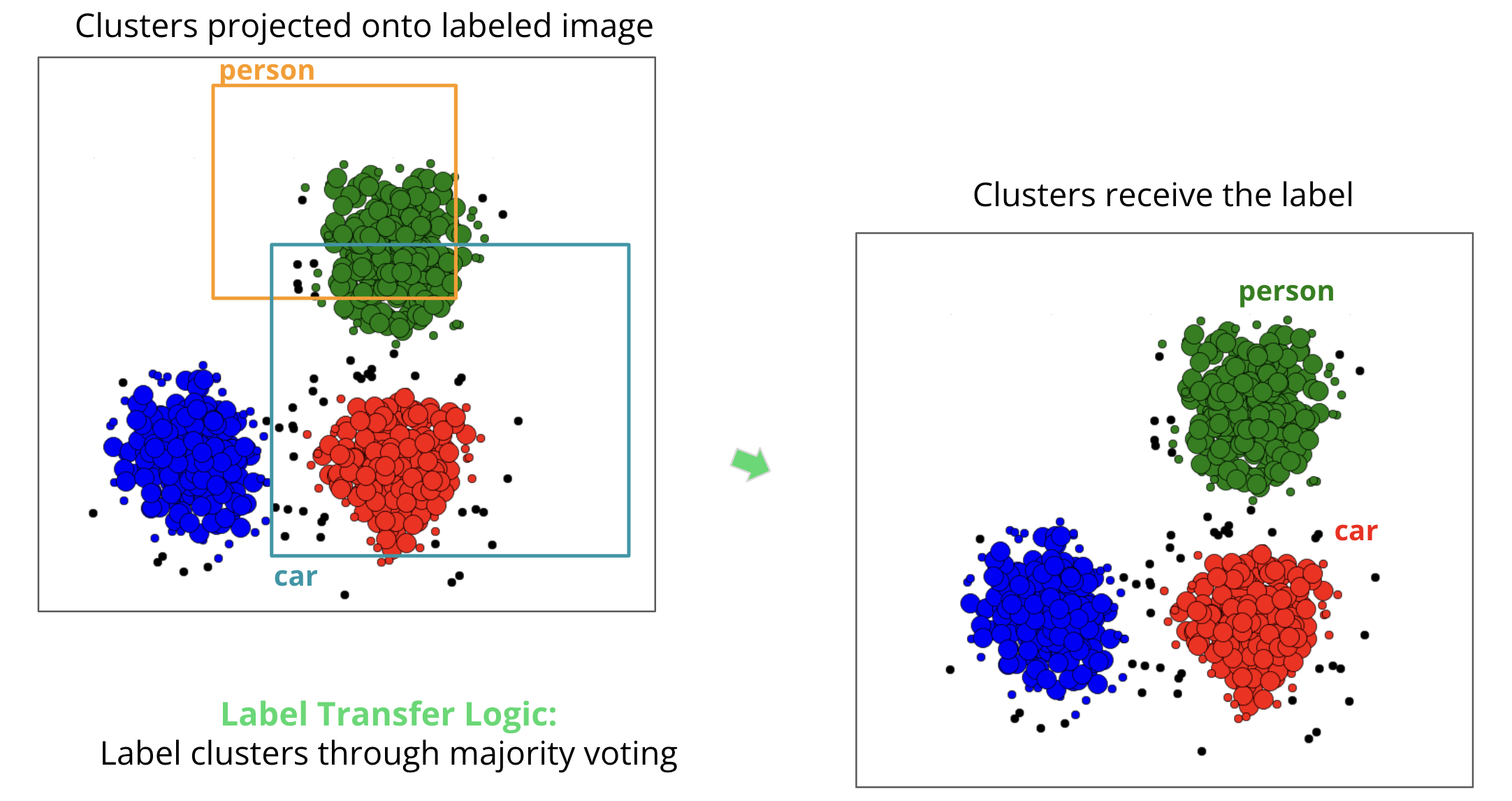

Merging Radar Clusters & Image Labels

Combining radar point data clusters with object labels generated by the YOLO algorithm for the image data.

For more details about each step, please see the sections below.

Motivation & Benefits of Automatic Radar Labeling

- Data fusion validation: By labeling objects detected in both radar point cloud and image data, you can validate the accuracy and consistency of the data fusion process. This can help to ensure that the combined data is reliable and useful for various downstream tasks, such as object tracking, path planning, or decision-making.

- Better understanding of object properties: Associating radar point cloud data with image-based object detections allows for a richer understanding of the properties of detected objects. For example, you can gain insight into an object's position, velocity, and distance (from radar data) and its appearance, shape, and texture (from image data). This can be especially useful for tasks such as object classification or behavior prediction.

- Improved object detection performance: By focusing on objects detectable by both radar and camera, you can improve the overall object detection performance of your system. The fusion of these two modalities can help to confirm and validate the presence of objects in the scene, leading to more accurate and reliable detection results. Additionally, it can help to reduce false positives and false negatives.

- Enhanced safety and reliability: In autonomous driving and advanced driver assistance systems (ADAS), safety and reliability are paramount. By labeling radar point cloud data that is also detected by the camera, you can provide an additional layer of redundancy and validation for the object detection system. This can contribute to a safer and more reliable driving experience.

Pipeline & Results

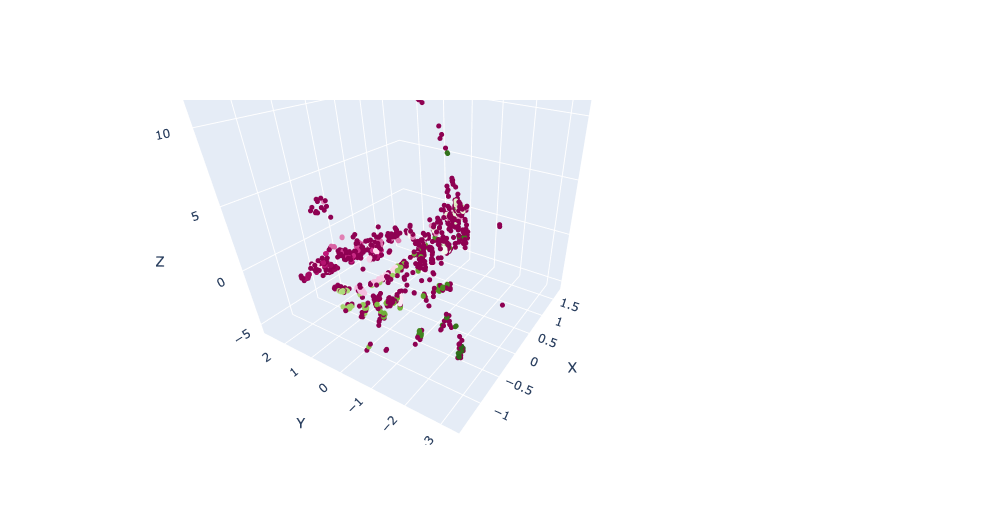

1. Coordinate Transformation

Coordinate transformation involves converting radar data from its native coordinate system (radar space) to a coordinate system that is aligned with the camera data (camera space). This process allows for the integration of information from multiple sensors to improve the accuracy of object detection and localization in complex environments.
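As a minimal sketch of the first stage, the code below assumes the radar reports each detection as range and angles, converts it to Cartesian radar coordinates, and then applies a rigid transform (rotation R plus translation t) into the vehicle frame. The axis convention (x forward, y left, z up), the yaw-only rotation, the function names, and the mounting offsets are all hypothetical; real extrinsics would come from the sensor calibration.

```python
import math

def polar_to_cartesian(r, azimuth, elevation):
    """Convert a radar detection (range in m, angles in rad) to radar-frame (x, y, z).
    Assumed convention: x forward, y left, z up."""
    x = r * math.cos(elevation) * math.cos(azimuth)
    y = r * math.cos(elevation) * math.sin(azimuth)
    z = r * math.sin(elevation)
    return (x, y, z)

def rotation_z(yaw):
    """3x3 rotation about the z axis. Roll and pitch are assumed to be zero here;
    a full calibration would use a general 3x3 rotation matrix."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def radar_to_vehicle(point, R, t):
    """Rigid transform: p_vehicle = R @ p_radar + t."""
    return tuple(sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))

# Hypothetical mounting: radar 3.5 m ahead of the vehicle origin, 0.5 m up, no rotation.
R_mount = rotation_z(0.0)
t_mount = (3.5, 0.0, 0.5)

p_radar = polar_to_cartesian(10.0, 0.0, 0.0)   # a target 10 m straight ahead of the radar
p_vehicle = radar_to_vehicle(p_radar, R_mount, t_mount)
print(p_vehicle)  # (13.5, 0.0, 0.5)
```

Keeping the polar conversion and the rigid transform separate means the same `radar_to_vehicle` step can be reused for any sensor once its R and t are known.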

Radar points in native 3D coordinates

↓

Projection to 3D vehicle coordinates

↓

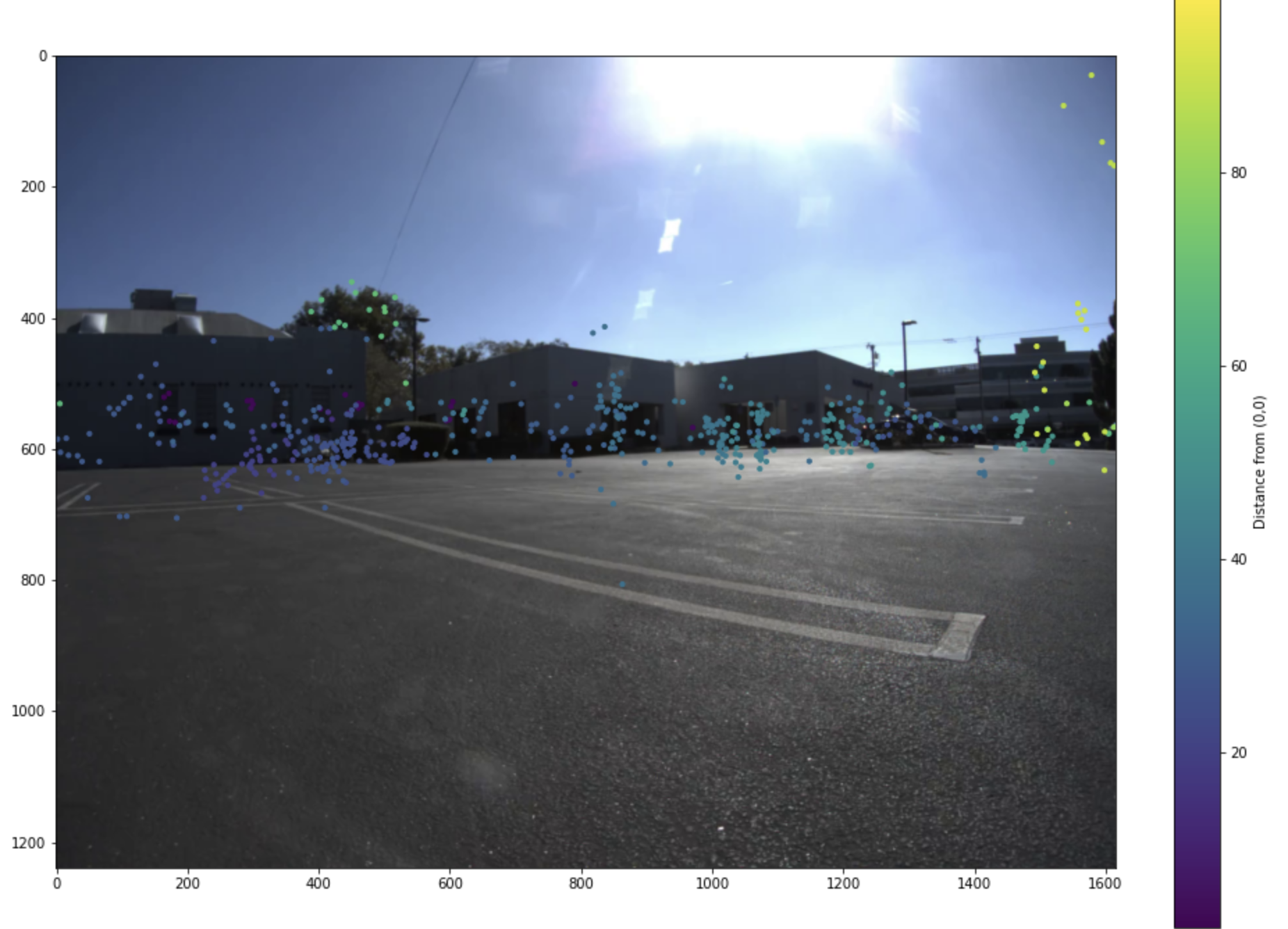

Projection onto 2D left image

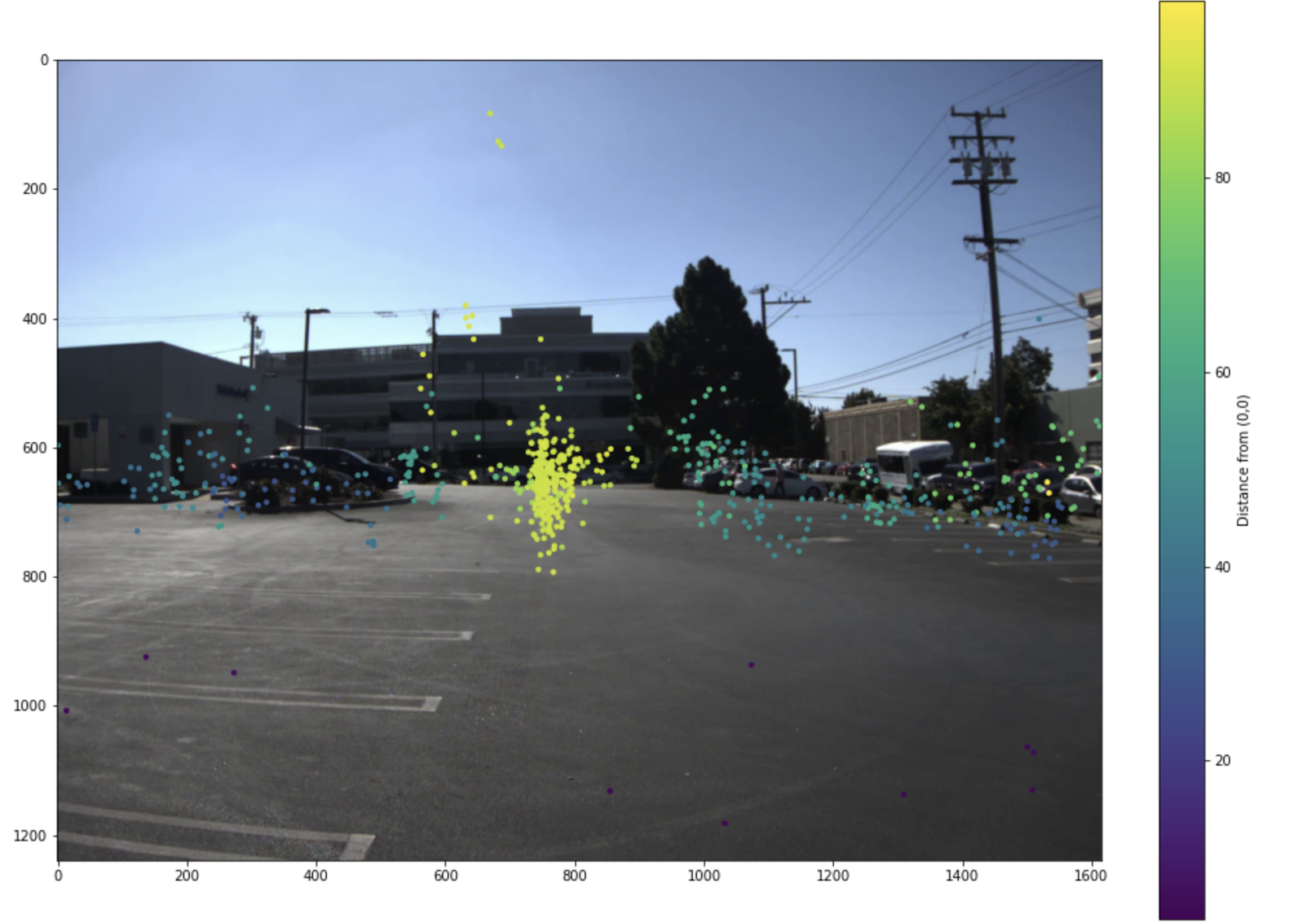

Projection onto 2D middle image

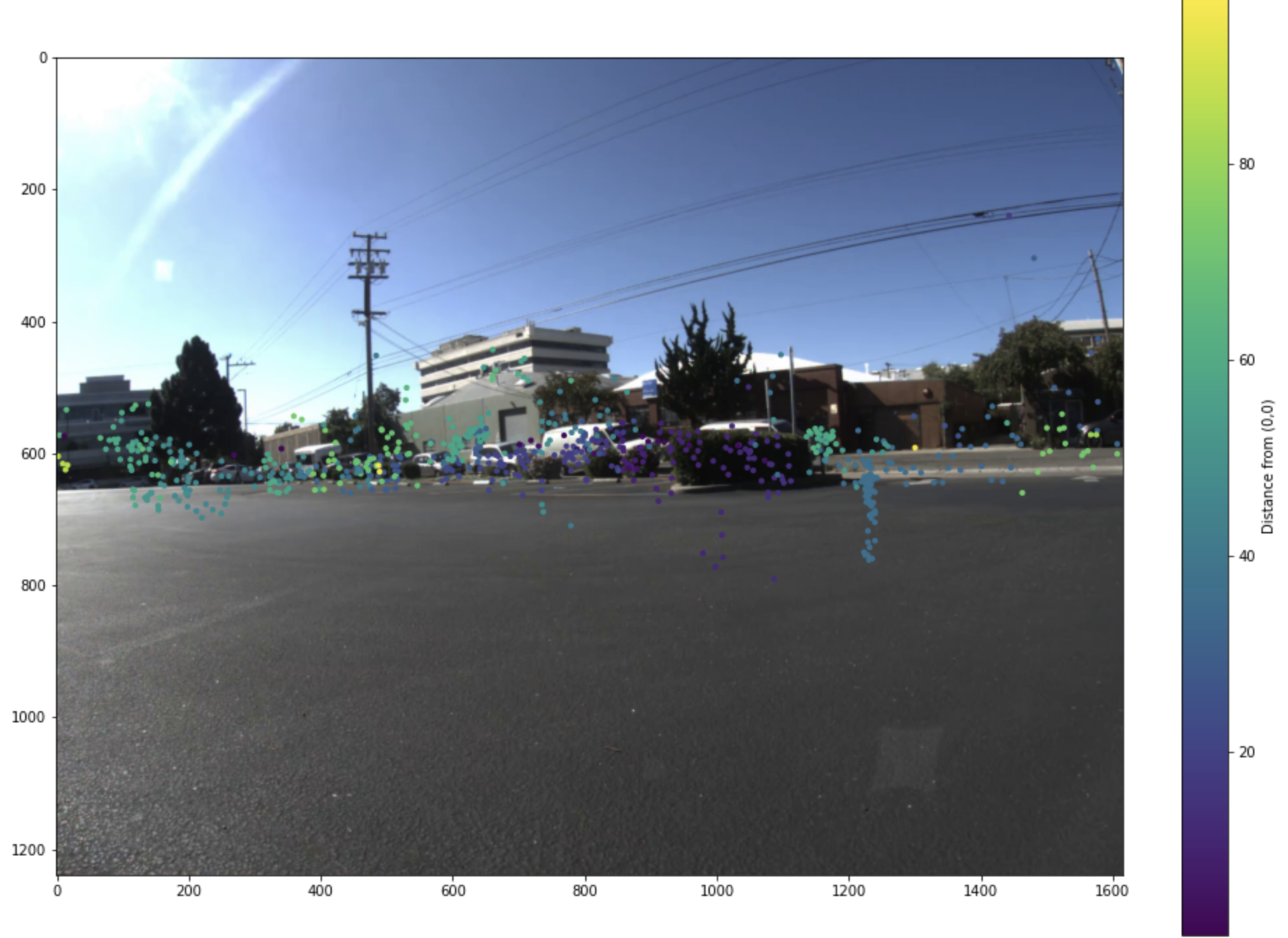

Projection onto 2D right image

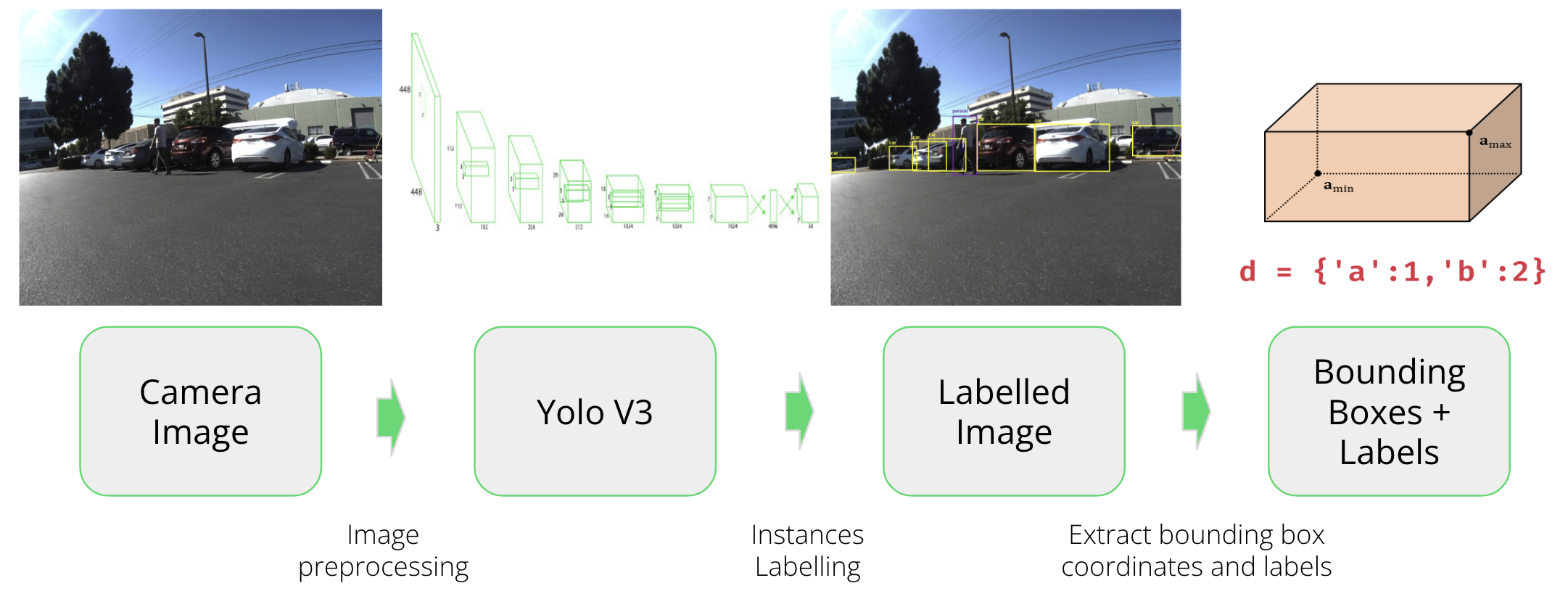

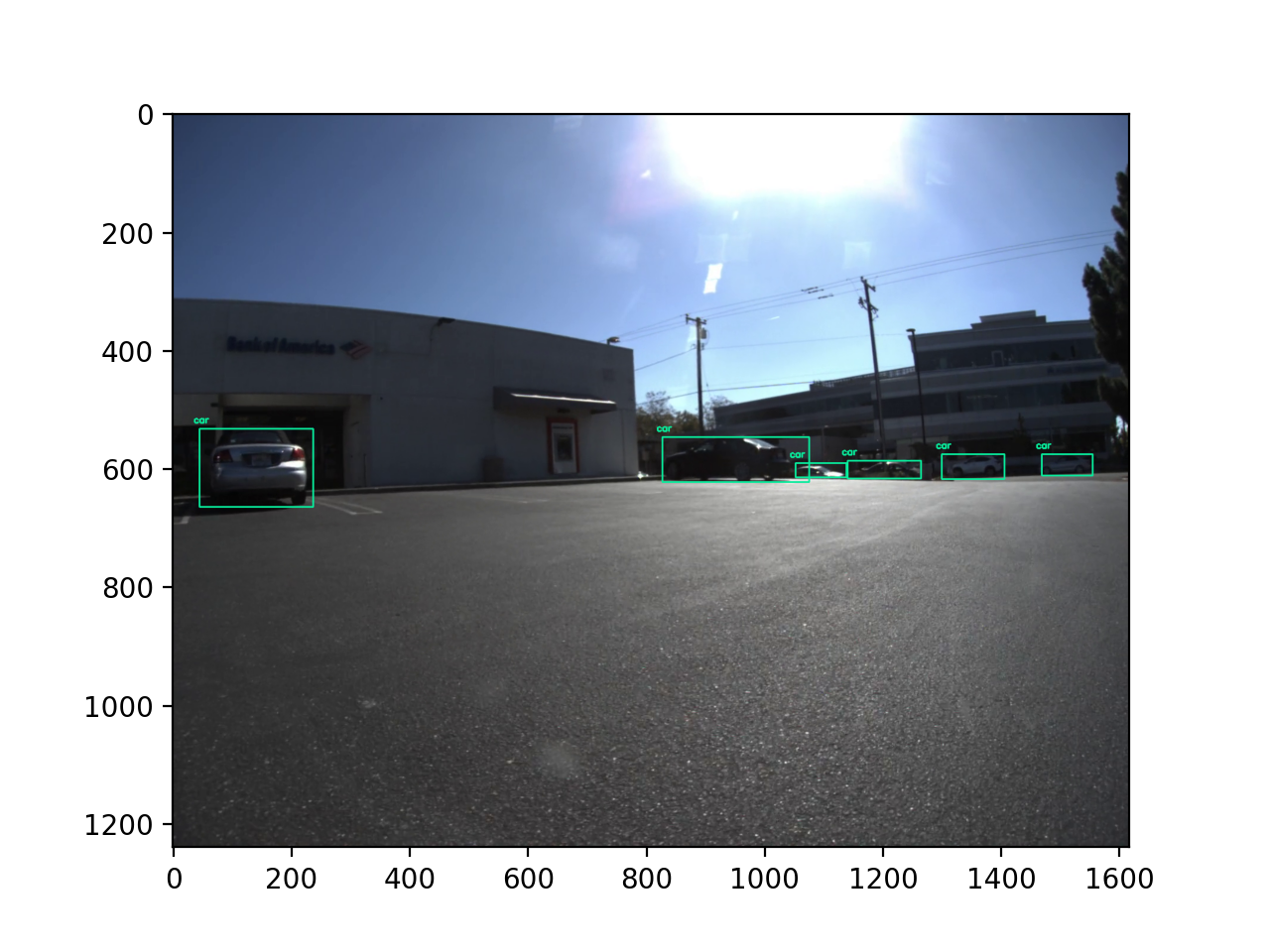

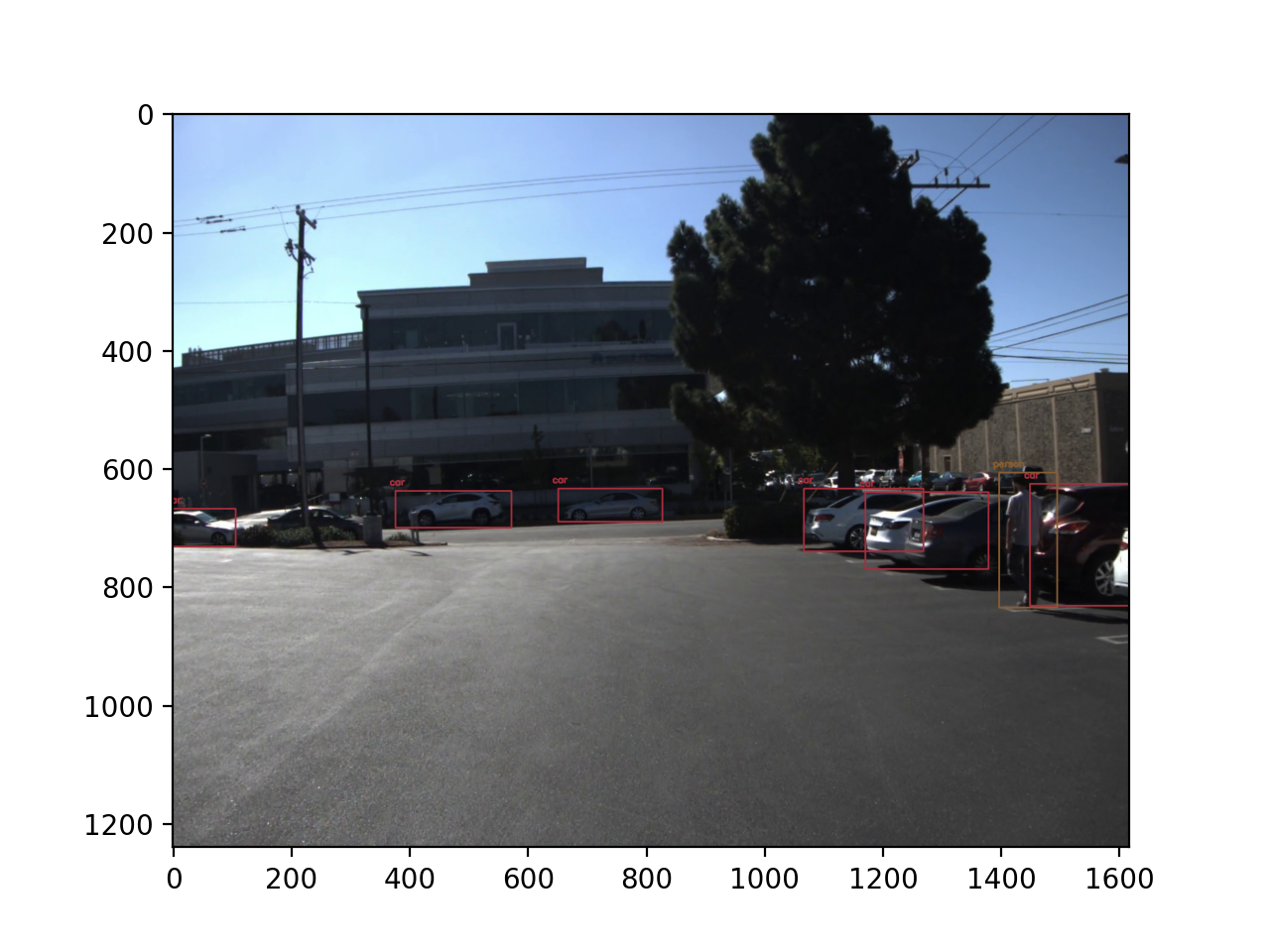

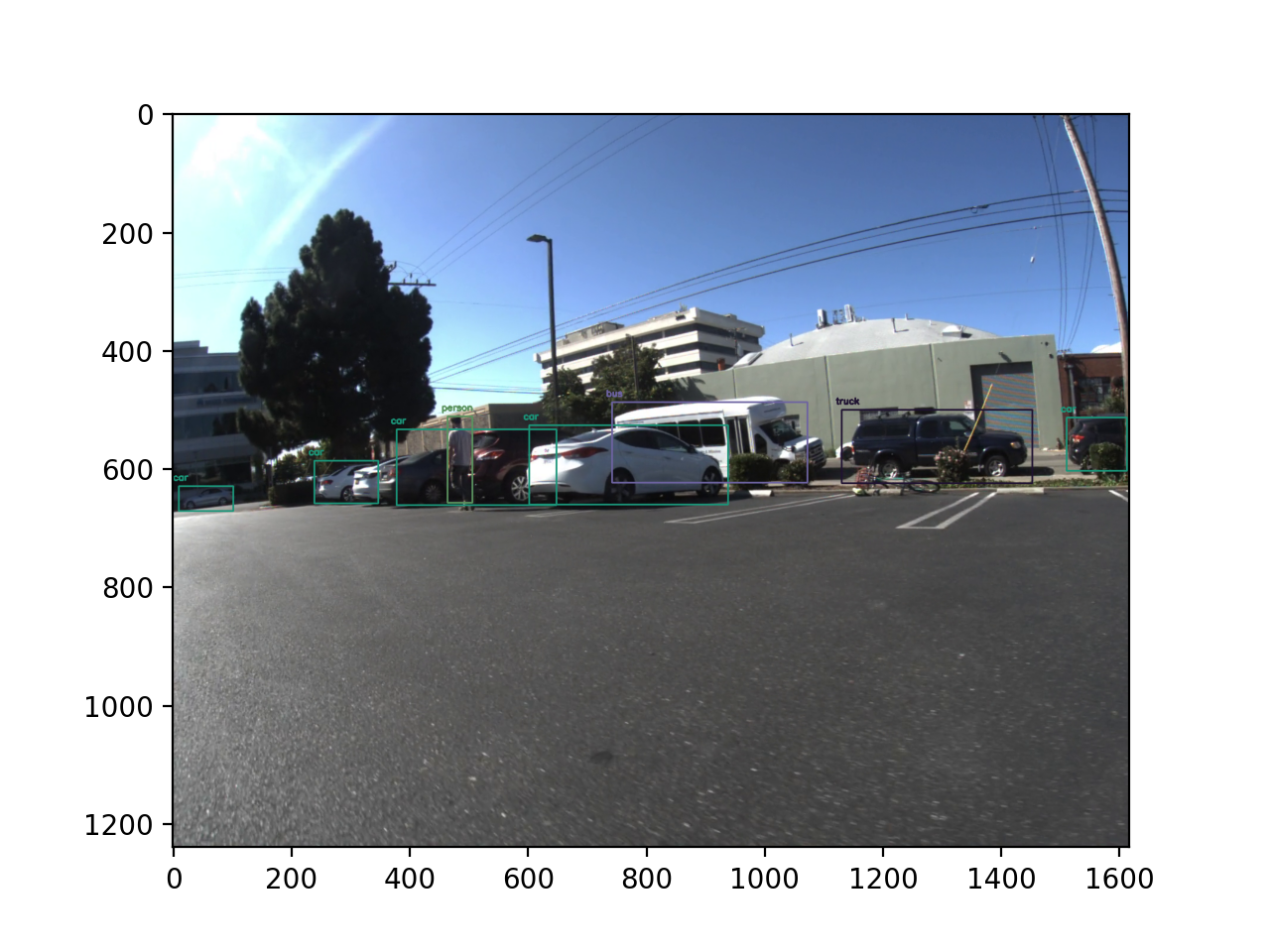

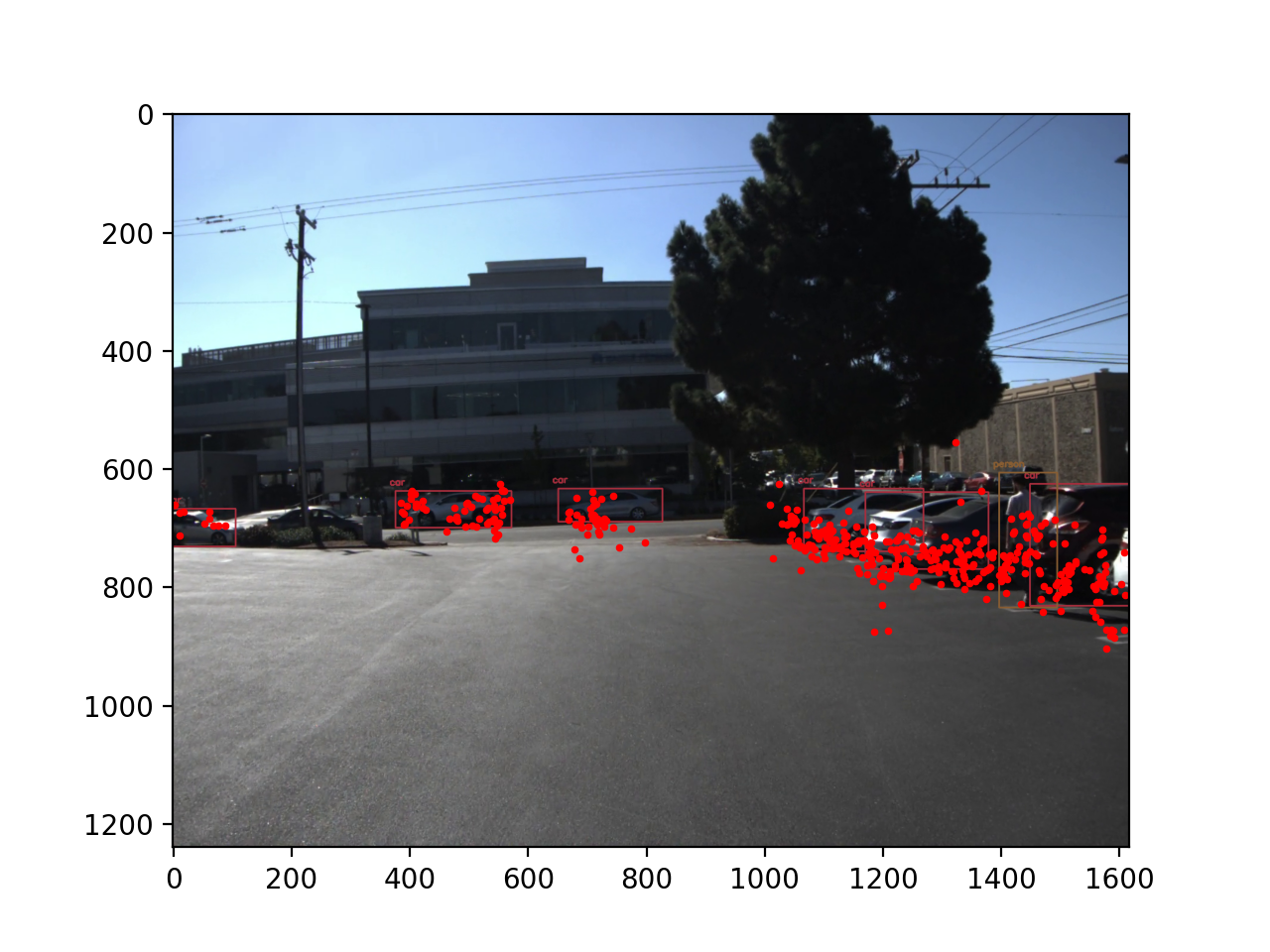
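The projection onto each 2D image can be sketched with a pinhole camera model: express the vehicle-frame point in the camera frame, divide by depth, and apply the intrinsics fx, fy, cx, cy. The camera position, the yaw used to model side-facing (left and right) cameras, the function names, and all intrinsic values below are hypothetical, and lens distortion is ignored; the actual calibration would come from the dataset.

```python
import math

def vehicle_to_camera(p, cam_pos, cam_yaw=0.0):
    """Map a vehicle-frame point (x forward, y left, z up) into the frame of a camera
    at cam_pos whose optical axis is yawed by cam_yaw radians (0 = straight ahead).
    Output uses the usual camera convention: x right, y down, z forward."""
    dx, dy, dz = p[0] - cam_pos[0], p[1] - cam_pos[1], p[2] - cam_pos[2]
    c, s = math.cos(cam_yaw), math.sin(cam_yaw)
    forward = c * dx + s * dy    # component along the optical axis
    left = -s * dx + c * dy      # component toward the camera's left
    return (-left, -dz, forward)

def project_to_image(p_cam, fx, fy, cx, cy, width, height):
    """Pinhole projection without distortion. Returns (u, v) in pixels,
    or None if the point is behind the camera or falls outside the image."""
    x, y, z = p_cam
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None

# Hypothetical middle camera: 1.5 m ahead of the vehicle origin, 1.2 m up, 1280x720 image.
K = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0, width=1280, height=720)
middle_cam = ((1.5, 0.0, 1.2), 0.0)

p_vehicle = (11.5, -1.0, 1.2)   # 10 m ahead of the camera and 1 m to its right
print(project_to_image(vehicle_to_camera(p_vehicle, *middle_cam), **K))  # (740.0, 360.0)
```

Returning None for points behind the camera or outside the frame matters here, since each radar point is typically visible in at most one or two of the three images.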

2. YOLOv3 Object Detection

YOLO (You Only Look Once) is a real-time object detection algorithm. It uses a single neural network to predict object classes and bounding boxes simultaneously in one pass over the input image. The network is trained on a large dataset of labeled images, with backpropagation used to update its weights.
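The network outputs many overlapping candidate boxes per image, and detectors in the YOLO family keep only the best ones using a confidence threshold followed by non-maximum suppression (NMS). The sketch below shows that post-processing step in plain Python; the (x1, y1, x2, y2) box format, the (box, score, class) tuple layout, and the threshold values are assumptions, not the settings used in this project.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(detections, score_threshold=0.25, iou_threshold=0.5):
    """Greedy per-class NMS. detections: list of (box, score, class_name).
    Drops low-confidence boxes, then keeps the highest-scoring box and discards
    any same-class box that overlaps an already kept box too much."""
    candidates = [d for d in detections if d[1] >= score_threshold]
    kept = []
    for det in sorted(candidates, key=lambda d: d[1], reverse=True):
        box, _score, cls = det
        if all(k[2] != cls or iou(box, k[0]) < iou_threshold for k in kept):
            kept.append(det)
    return kept

detections = [
    ((0, 0, 10, 10), 0.9, "car"),
    ((1, 1, 11, 11), 0.8, "car"),       # near-duplicate of the first car box
    ((0, 0, 10, 10), 0.7, "person"),    # different class, so it is not suppressed
    ((50, 50, 60, 60), 0.1, "car"),     # below the confidence threshold
]
kept = non_max_suppression(detections)
print([(cls, score) for _box, score, cls in kept])
```

Running NMS per class, as here, lets a person and a car occupy overlapping boxes; class-agnostic NMS would suppress one of them.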

Left Camera YOLO Results

Middle Camera YOLO Results

Right Camera YOLO Results

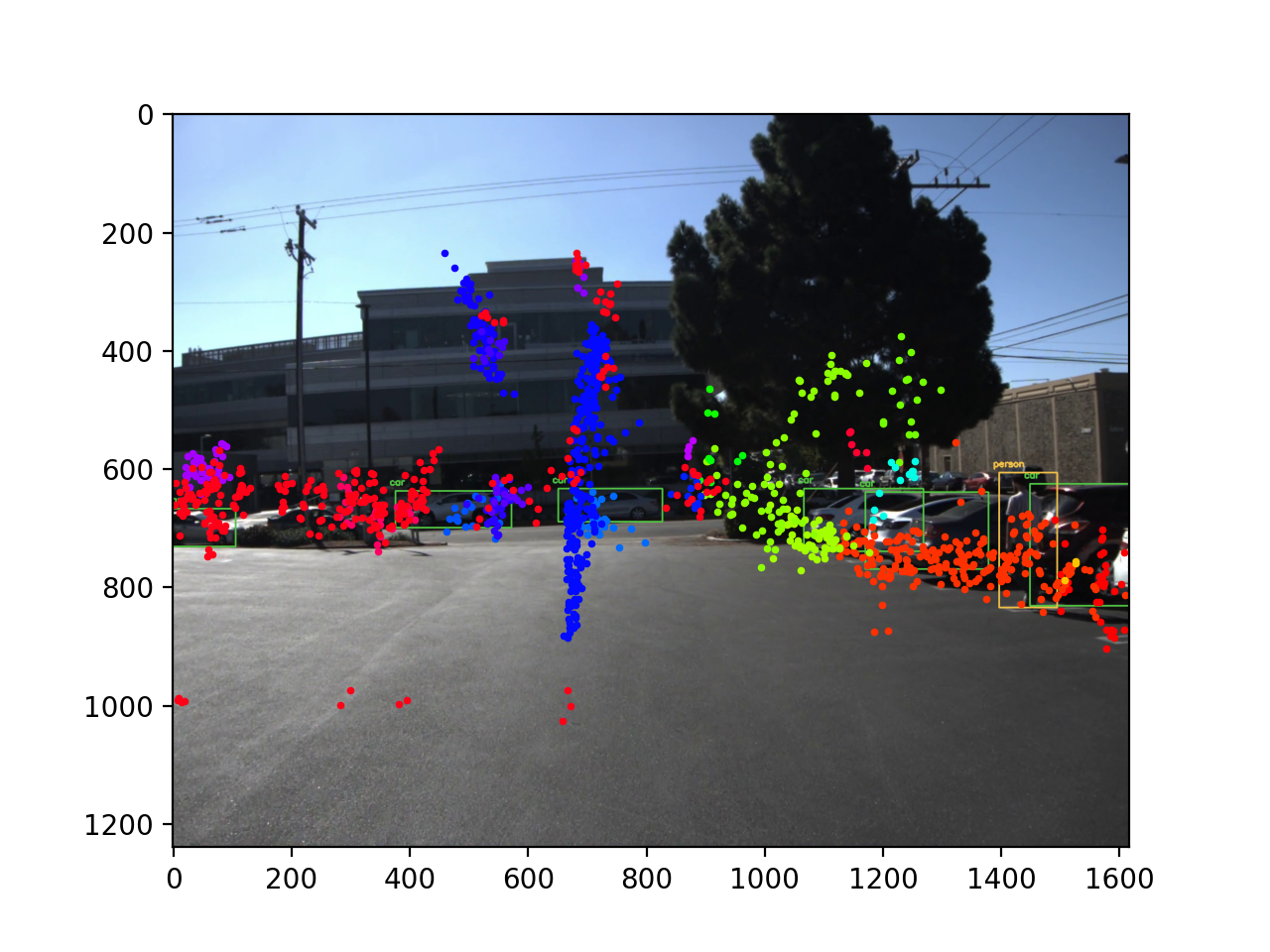

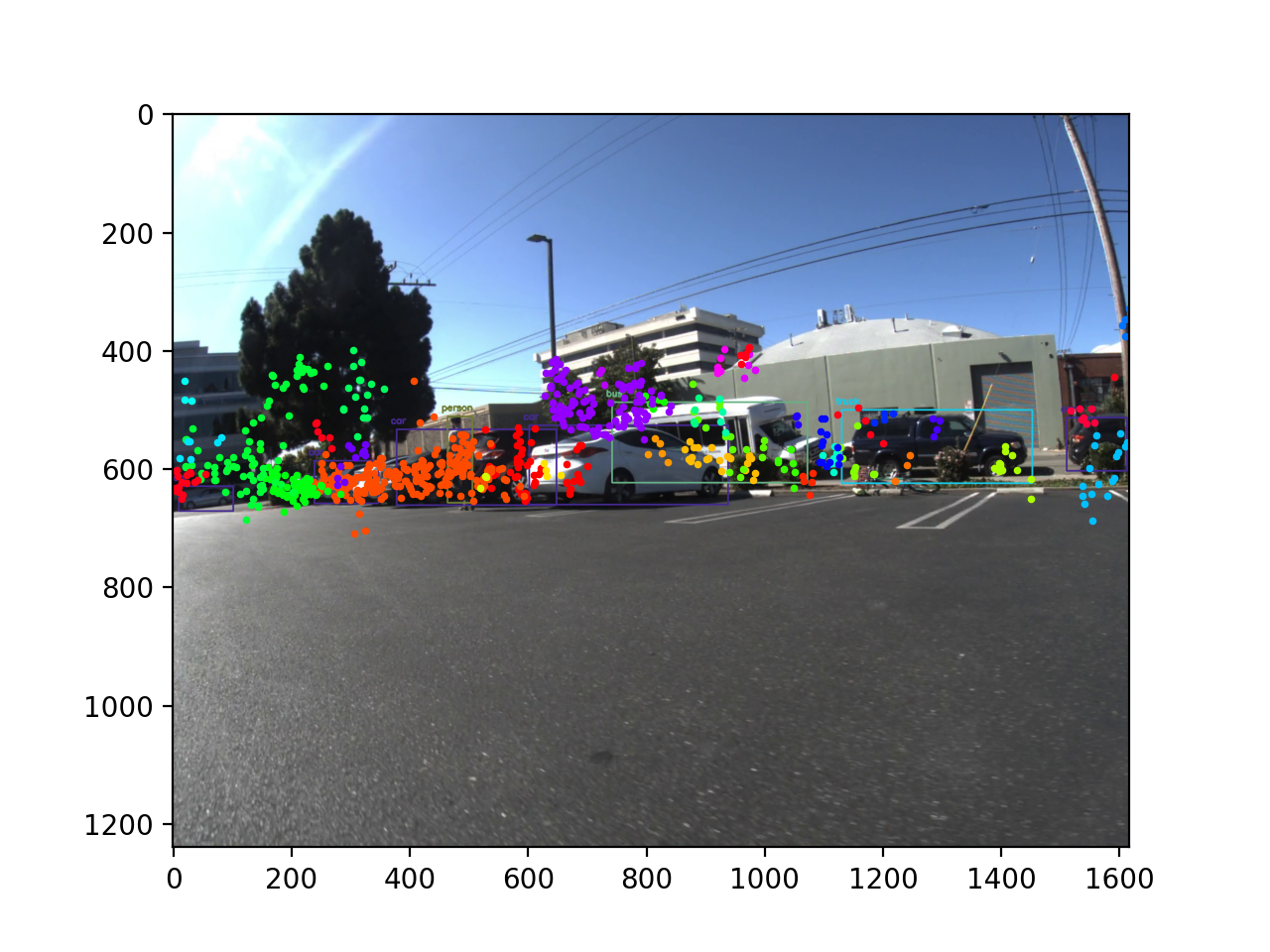

3. DBSCAN Clustering

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is a clustering algorithm used for unsupervised machine learning. It groups together points that lie close to one another in feature space and labels points in sparse regions as noise. Two hyperparameters control the result: a neighborhood radius, epsilon, and a minimum number of points, MinPts. A point with at least MinPts points within distance epsilon is a core point. A cluster starts from a core point and repeatedly adds every point within epsilon of any of its core points until no more points can be added; points not reachable from any core point are treated as noise.
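The procedure above can be sketched in plain Python as follows. It uses a brute-force neighbor search, which is quadratic in the number of points and would normally be replaced by a spatial index for large inputs; the function names, example points, and eps and min_pts values are made up for illustration. As in the common definition, min_pts counts the point itself.

```python
import math

NOISE = -1

def region_query(points, i, eps):
    """Indices of all points within eps (Euclidean) of points[i], including i itself."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

def dbscan(points, eps, min_pts):
    """Return one label per point: 0, 1, 2, ... for clusters and -1 for noise."""
    labels = [None] * len(points)   # None means "not visited yet"
    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE        # may later become a border point of some cluster
            continue
        labels[i] = cluster_id       # i is a core point: start a new cluster
        seeds = list(neighbors)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster_id   # border point: reachable but not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                seeds.extend(j_neighbors)   # j is also a core point: keep growing
        cluster_id += 1
    return labels

# Two tight groups of 2D points plus one isolated point.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]
print(dbscan(points, eps=1.5, min_pts=3))  # [0, 0, 0, 1, 1, 1, -1]
```

Unlike k-means, the number of clusters is not fixed in advance, which suits radar frames where the number of objects varies from scene to scene.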

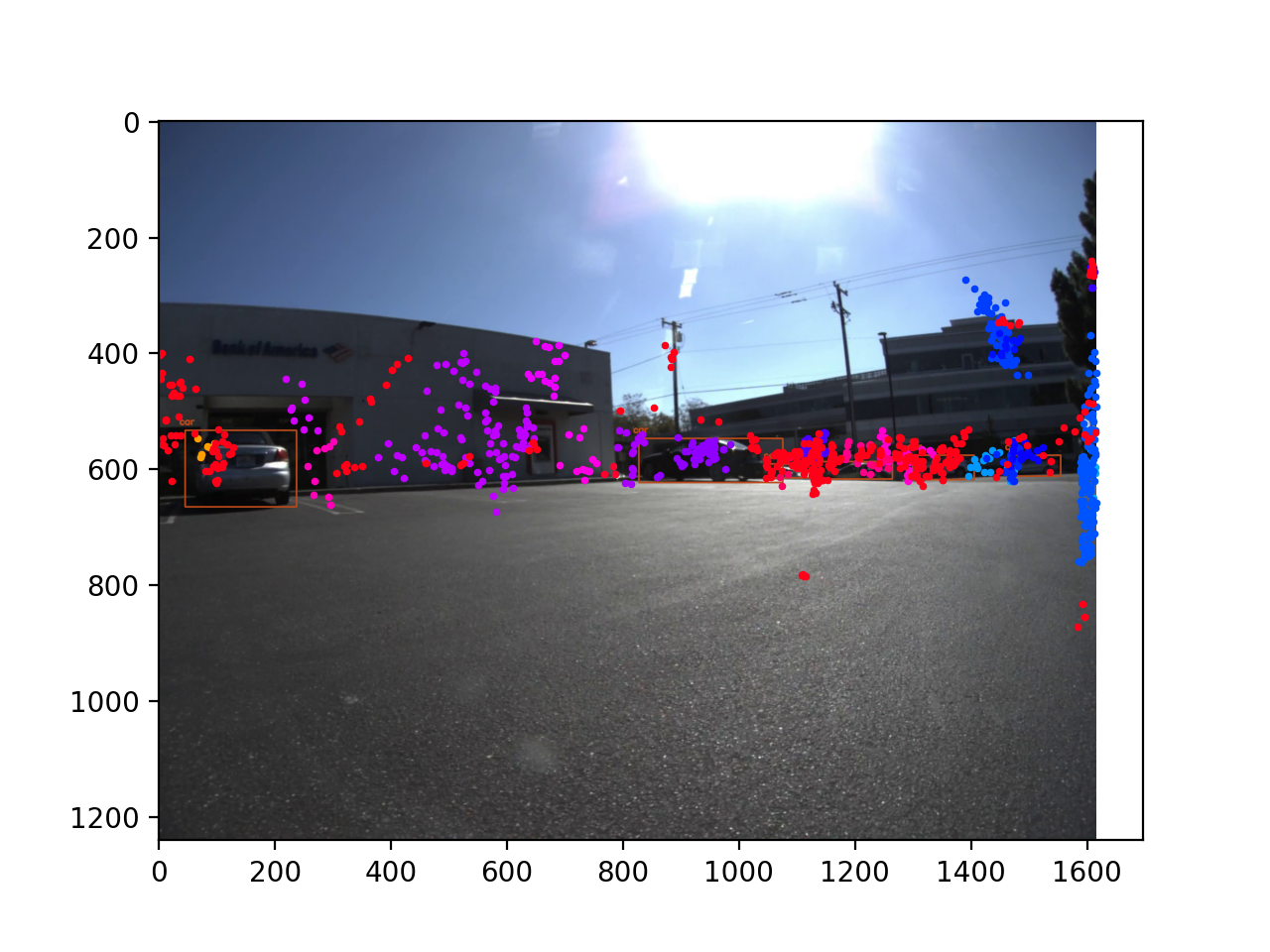

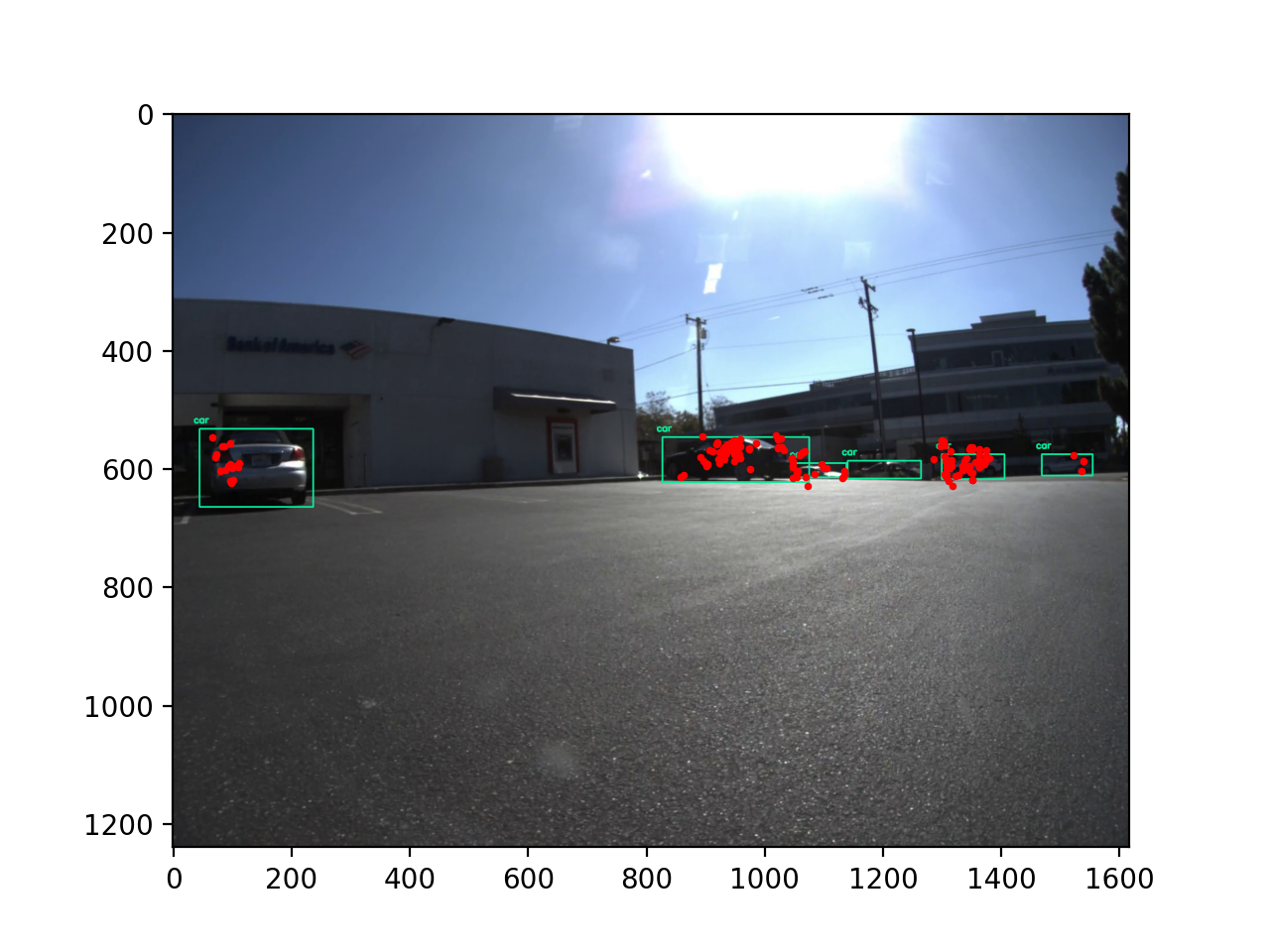

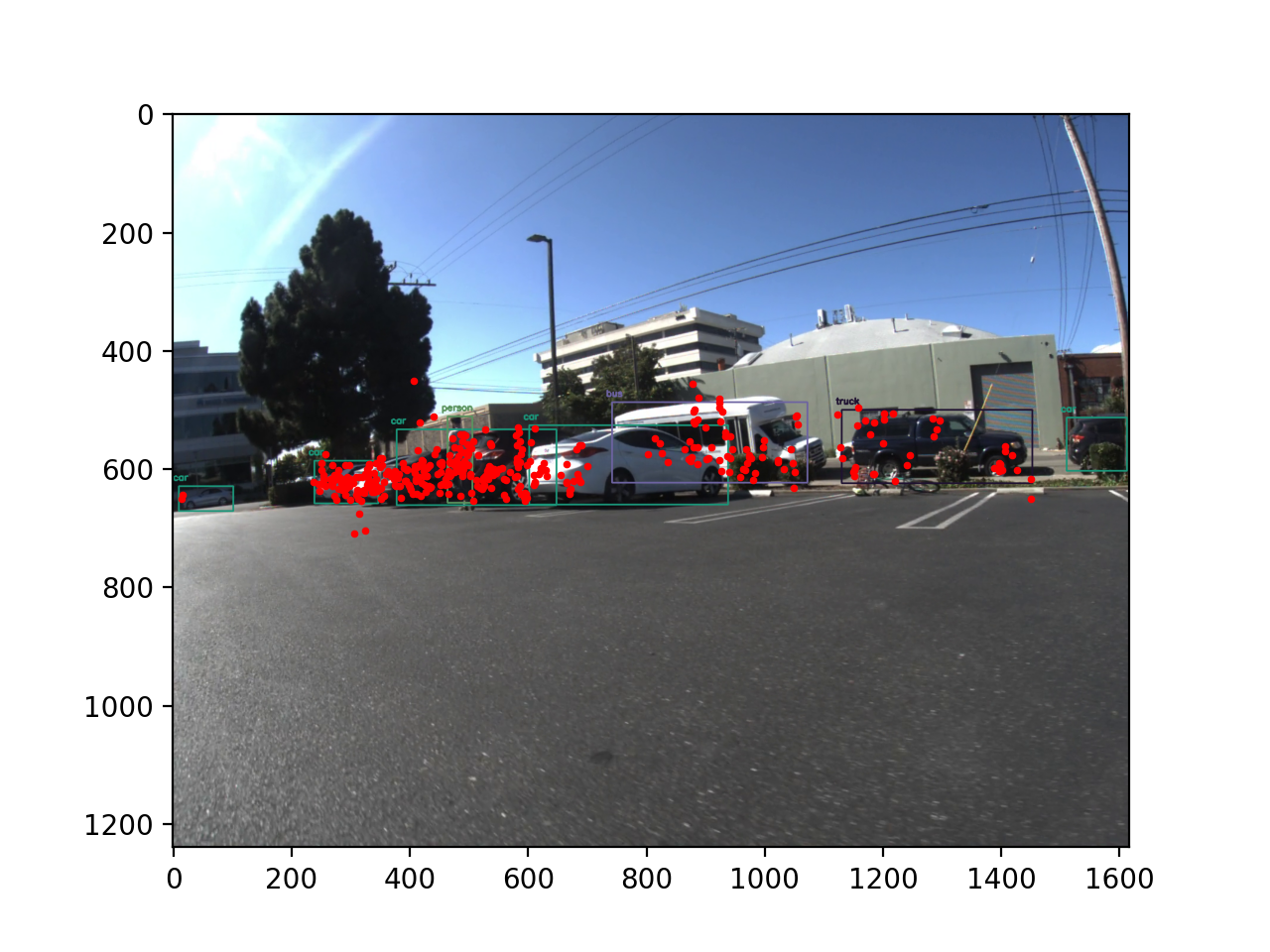

4. Merging Radar Clusters and Image Labels

The final step of the pipeline combines the results of the clustering and labeling algorithms. Radar point clusters obtained from DBSCAN are merged with the object labels that YOLO produces for the image data. Clusters and labels are matched using the overlap between the bounding boxes of the projected radar points and the image labels.
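A minimal sketch of the matching step, assuming each radar cluster has already been projected into a camera image as a list of pixel coordinates. Instead of intersecting two rectangles, this variant scores each YOLO box by the fraction of a cluster's projected points that fall inside it, which avoids degenerate rectangles when a cluster projects to a thin column of points. The function names and the 0.5 threshold are hypothetical, and the project's exact overlap criterion may differ.

```python
def points_in_box(pixels, box):
    """Count projected radar points (u, v) that fall inside box = (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return sum(1 for u, v in pixels if x1 <= u <= x2 and y1 <= v <= y2)

def assign_labels(clusters, detections, min_fraction=0.5):
    """clusters: dict cluster_id -> list of (u, v) pixels of its projected radar points.
    detections: list of (box, score, class_name) from the image detector.
    Returns dict cluster_id -> class_name, or None when no box covers enough points."""
    result = {}
    for cid, pixels in clusters.items():
        best_cls, best_frac = None, 0.0
        for box, _score, cls in detections:
            frac = points_in_box(pixels, box) / len(pixels) if pixels else 0.0
            if frac > best_frac:
                best_cls, best_frac = cls, frac
        result[cid] = best_cls if best_frac >= min_fraction else None
    return result

clusters = {
    0: [(100, 200), (110, 210), (120, 205)],   # all three points inside the car box
    1: [(600, 300), (900, 300), (950, 310)],   # only one of three inside the person box
}
detections = [((90, 190, 130, 220), 0.9, "car"), ((580, 280, 700, 320), 0.8, "person")]
print(assign_labels(clusters, detections))  # {0: 'car', 1: None}
```

Clusters left as None correspond to radar returns with no matching camera detection, which is exactly the set that would be excluded when labeling only objects seen by both sensors.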

Before Merge

After Merge

Left Camera Clustering Results

Left Camera Labeling Results

Middle Camera Clustering Results

Middle Camera Labeling Results

Right Camera Clustering Results

Right Camera Labeling Results

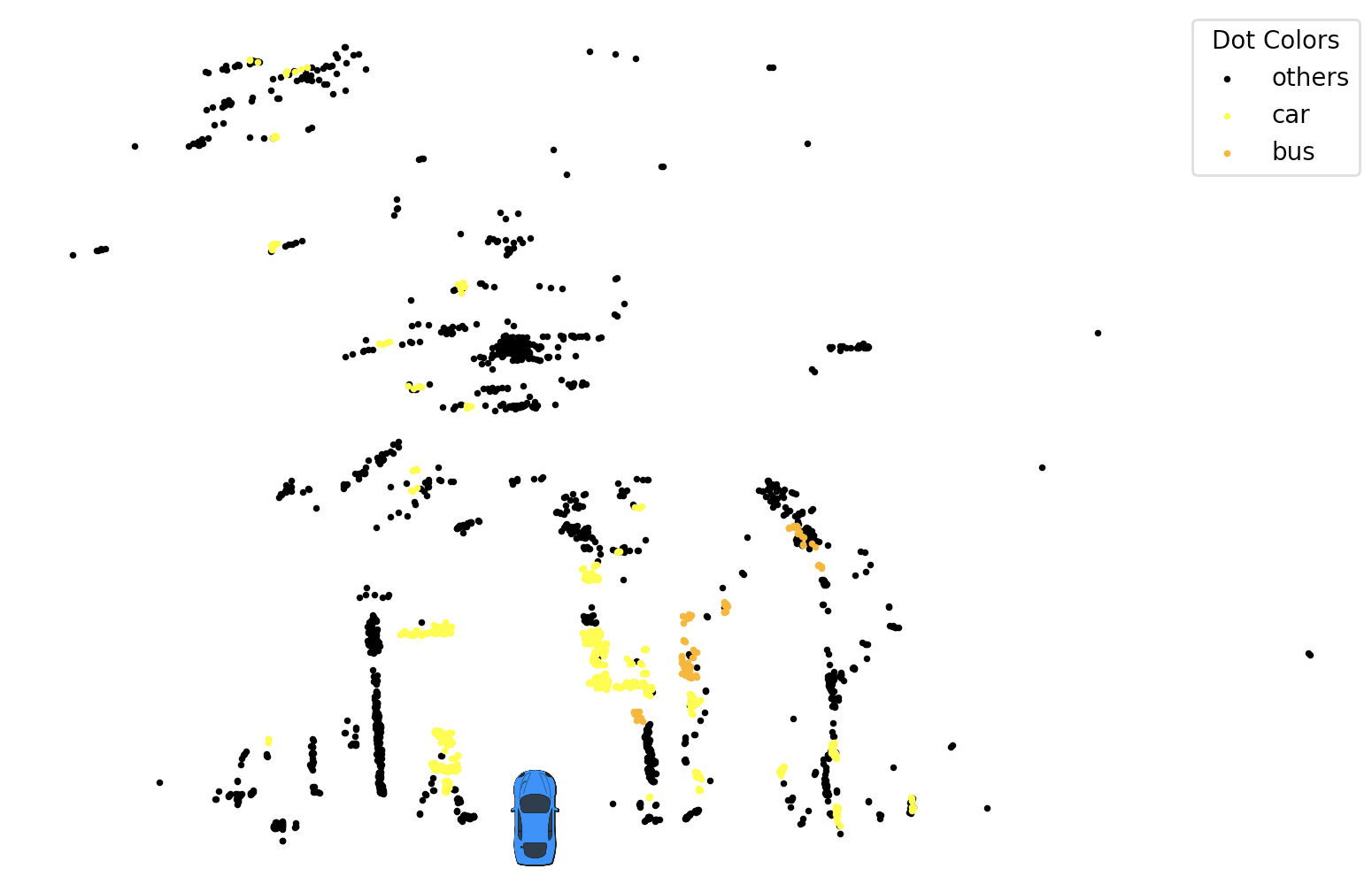

5. Final Bird's-Eye View with Labeled Data

Once the radar data are labeled in 3D, we can display the labeled point cloud relative to the vehicle's position.

Below is an example of the visualization.

Labeled Point Cloud in Bird's-Eye View
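A bird's-eye view essentially drops the height coordinate and plots each labeled point's x and y relative to the vehicle. The sketch below rasterizes labeled vehicle-frame points into a coarse text grid with the vehicle at the bottom center; the function names, grid extent, and cell size are hypothetical, and the project's visualization may well use a plotting library instead.

```python
def bev_grid(labeled_points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell=1.0):
    """Rasterize ((x, y, z), label) pairs in the vehicle frame (x forward, y left)
    into a 2D grid. Row 0 is farthest ahead; column 0 is farthest to the left.
    If several points share a cell, the last one wins."""
    rows = int((x_range[1] - x_range[0]) / cell)
    cols = int((y_range[1] - y_range[0]) / cell)
    grid = [[None] * cols for _ in range(rows)]
    for (x, y, _z), label in labeled_points:     # height z is ignored in a top-down view
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue                             # outside the displayed area
        row = rows - 1 - int((x - x_range[0]) / cell)
        col = cols - 1 - int((y - y_range[0]) / cell)
        grid[row][col] = label
    return grid

def render(grid):
    """One character per cell: first letter of the label, or '.' if empty."""
    return "\n".join("".join(c[0].upper() if c else "." for c in row) for row in grid)

points = [((2.5, 0.5, 0.3), "car"), ((0.5, -1.5, 0.9), "person"), ((10.0, 0.0, 0.0), "car")]
print(render(bev_grid(points, x_range=(0.0, 4.0), y_range=(-2.0, 2.0))))
```

With the small 4 m by 4 m window above, the car 2.5 m ahead appears near the top center, the person just ahead and to the right appears at the bottom right, and the point 10 m ahead is clipped.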

6. Software & Demo Video

Limitations

While our end-to-end pipeline has shown promising results in object detection and localization, it is important to acknowledge some limitations and potential areas for improvement:

- Sensor limitations: The accuracy of our system largely depends on the quality and resolution of the input data from radar and image sensors. Low-quality sensors or noisy data can negatively affect the performance of the pipeline.

- Algorithm limitations: The YOLO object detection algorithm and the DBSCAN clustering algorithm have inherent limitations. For instance, YOLO may struggle to detect small or partially occluded objects, and DBSCAN is sensitive to the choice of hyperparameters (epsilon and the minimum number of points), which can affect clustering quality.

- Computational complexity: Real-time processing of radar and image data requires significant computational resources. The pipeline may experience performance issues on low-end hardware or when handling large datasets.

- Generalization: Our pipeline's performance in diverse and complex environments has yet to be fully evaluated. More extensive testing and validation are required to ensure the robustness of the system across a variety of real-world scenarios.
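On the DBSCAN hyperparameter sensitivity noted above: a widely used heuristic, proposed together with DBSCAN, is to sort each point's distance to its k-th nearest neighbor and look for the "knee" where the curve drops sharply; epsilon is then chosen near that knee. The sketch below computes that curve; the function name and example points are hypothetical, and k = min_pts - 1 assumes min_pts counts the point itself.

```python
import math

def k_distances(points, k):
    """Distance from each point to its k-th nearest other point, sorted descending."""
    result = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        result.append(dists[k - 1])
    return sorted(result, reverse=True)

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]
min_pts = 3
curve = k_distances(points, k=min_pts - 1)
print([round(d, 2) for d in curve])
```

Here the curve falls from about 56 (the isolated point) to about 1.4 and below, so an epsilon around 1.5 separates the two dense groups from the outlier. On real radar frames the knee is usually less obvious and is worth checking across several scenes.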

In future iterations of the project, we plan to address these limitations by refining our algorithms, incorporating more advanced sensor technologies, and optimizing the pipeline for better computational efficiency. The preliminary results demonstrate the potential of our approach in improving object detection and localization, and we believe further development can lead to more accurate and robust systems for autonomous driving applications.